Posted by Nodus Labs | April 7, 2022

Sentiment Analysis: AFINN vs BERT AI Algorithms (Using Twitter and Amazon Examples)

Sentiment analysis helps us understand emotion in text. Most models provide a basic categorization (“positive”, “negative”, and “neutral”), which is usually sufficient for gaining insight into a product or a public discourse.

In this article, we demonstrate how different sentiment analysis algorithms perform on Amazon and Twitter data (obtained from Kaggle). We use the InfraNodus text network analysis tool, which has the dictionary-based AFINN and the machine-learning BERT AI algorithms built in, as well as its own text network sentiment analysis module.

AFINN vs BERT AI Algorithms for Sentiment Analysis

There are two main approaches to sentiment analysis.

One is to use a dictionary-based algorithm, such as the AFINN model. Each word in the dictionary is associated with a sentiment score. We tokenize a statement and give each word a negative score if it is negative and a positive score if it is positive. Then we sum up the scores for the statement. The total shows whether the statement contains predominantly negative or positive words.

Such analysis is not very precise, as it cannot capture the nuances, syntax, semantics, and relations inside a text. For instance, if a customer says “At first, I didn’t think this product the right fit for me, and, as it turned out, I was actually right! Maybe some people will like it, but for me, it’s not really worth it.”, the AFINN model will mark it as “positive”, because the words it recognizes are all tagged as positive. It does not really understand the meaning of the sentence, which is negative for the customer. The upside is that this model is pretty fast.
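The dictionary-based scoring described above can be sketched in a few lines of Python. This is a minimal illustration, not the InfraNodus implementation: the lexicon below is a toy one with made-up scores (the real AFINN word list is much larger and its values may differ), and the function names are hypothetical.

```python
import re

# Toy lexicon for illustration only; these are NOT the real AFINN entries.
TOY_LEXICON = {
    "good": 3,
    "like": 2,
    "worth": 2,
    "bad": -3,
    "broken": -2,
    "disappointing": -2,
}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def dictionary_score(text, lexicon=TOY_LEXICON):
    """Sum the lexicon scores of all tokens; unknown words count as 0."""
    return sum(lexicon.get(token, 0) for token in tokenize(text))


def label(score):
    """Map a summed score to a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


review = "Maybe some people will like it, but for me, it's not really worth it."
score = dictionary_score(review)
print(score, label(score))
```

With this toy lexicon, the review is scored as positive because “like” and “worth” both carry positive values, while the negation (“not really”) is ignored. That is the weakness the example sentence is meant to expose.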

This is where the newer machine-learning models come into the picture. They are trained on existing content and can distinguish nuances that the dictionary models cannot. For instance, the BERT AI model was trained on customer reviews and can predict the rating a statement would get (from 1 star to 5 stars). It can be used to mark statements as “negative” (1 or 2 stars), “neutral” (3 stars), or “positive” (4 or 5 stars). The same sentence above (“At first, I didn’t think this product the right fit for me, and, as it turned out, I was actually right! Maybe some people will like it, but for me, it’s not really worth it.”) will be marked as “negative” by the system, which means it can capture the nuance much better. The downside is that this model may be slower than the dictionary-based ones. The upside is that it can work for multiple languages and is much more precise.
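The mapping from a predicted star rating to a sentiment label can be sketched as follows. The predicted ratings here are hard-coded, hypothetical values standing in for a model's output, and the function name is an assumption for illustration.

```python
def stars_to_sentiment(stars):
    """Map a predicted 1-5 star rating to a coarse sentiment label."""
    if not 1 <= stars <= 5:
        raise ValueError(f"expected a rating from 1 to 5, got {stars}")
    if stars <= 2:
        return "negative"
    if stars == 3:
        return "neutral"
    return "positive"


# Hypothetical predictions, standing in for a model's output.
predicted_stars = [1, 3, 5, 2, 4]
labels = [stars_to_sentiment(s) for s in predicted_stars]
print(labels)
```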

A third, complementary approach allows us to reveal more nuance than simply the “negative” and the “positive” sentiment. Instead of scoring statements, we analyze all the words contained in a review and contextualize them with the rest of the content we have. We then identify topical clusters: words that tend to be used in the same context. Based on those topical clusters, we can better understand what the customers are thinking and feeling when they write a particular statement. This approach can be implemented with co-occurrence-based methods, such as LDA or the newer text network analysis approaches.

All three methods for sentiment analysis are available in the InfraNodus text analysis platform, and below we demonstrate how they can be used and how they compare.

Sentiment Analysis on Real Data — Amazon Reviews

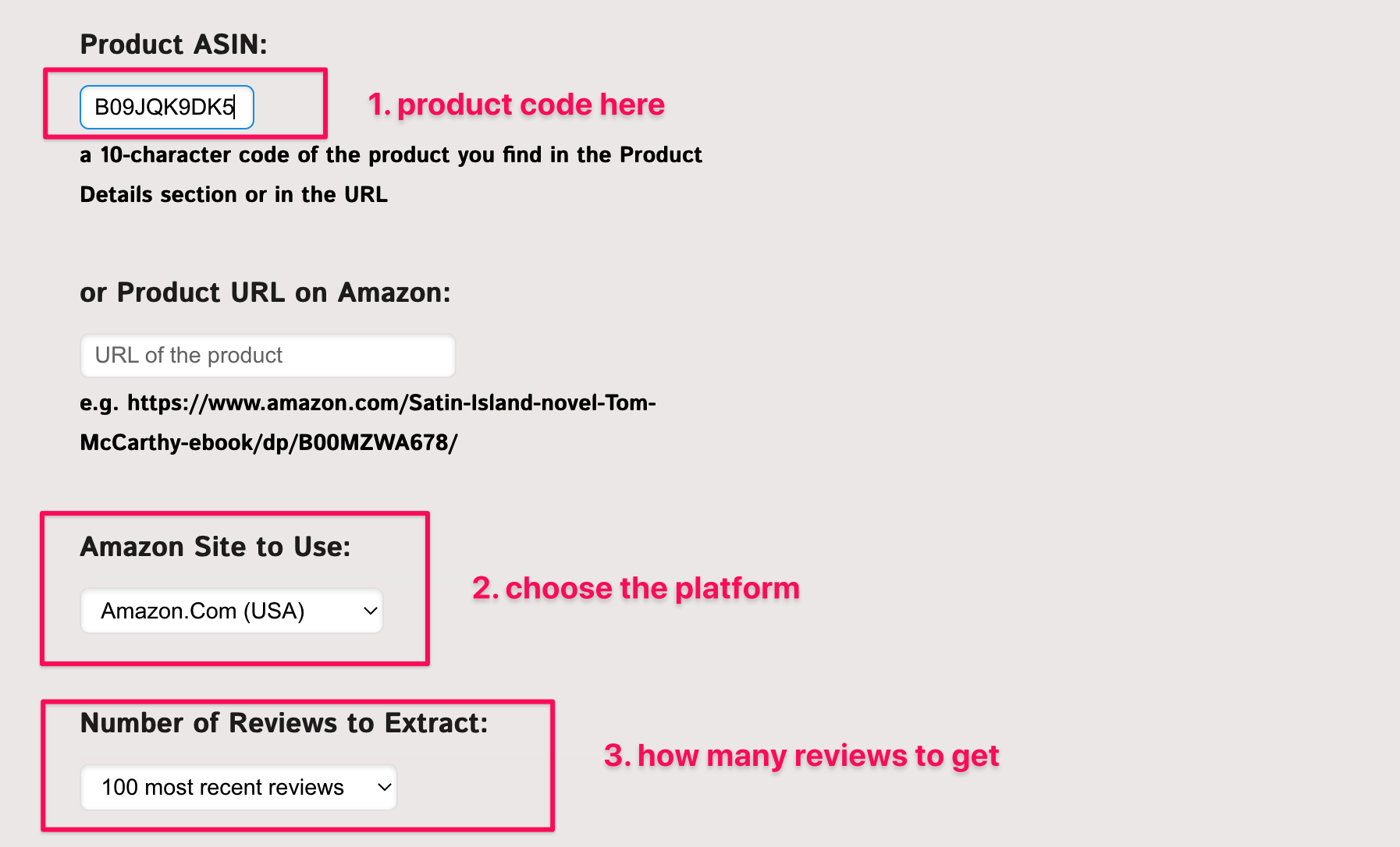

Let’s try this approach on Amazon reviews. Both the AFINN and BERT AI models are built into the sentiment analysis module in InfraNodus. We will use the InfraNodus Amazon import function to get the reviews of a product. Amazon reviews already have star ratings, so we can compare these models against the real data and see if there’s any difference.

First, we choose the ASIN of the product we want to analyze and enter it in the Amazon import of InfraNodus. In our case, it’s going to be the 16-inch 2021 MacBook Pro laptop with the M1 processor:

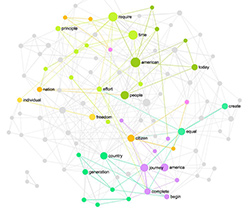

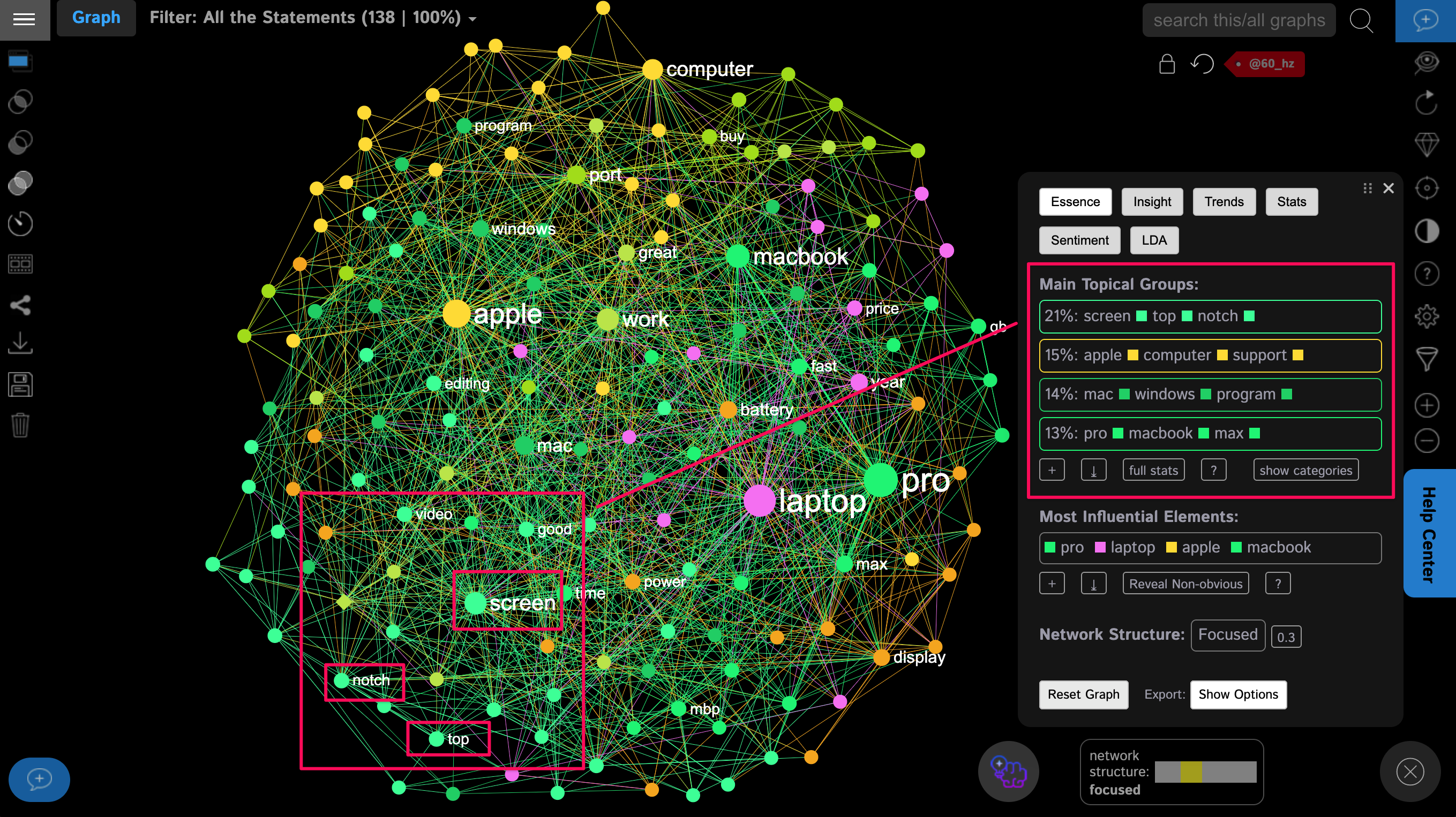

Once we specify all the details and wait for a few seconds for the reviews to upload to InfraNodus, we will get a graph of all the reviews:

InfraNodus represents every unique word (lemma) found in the reviews as a node and every co-occurrence of two words as a connection. It then builds a graph and applies network analysis algorithms to detect the most influential nodes (shown bigger on the graph) and the topical clusters (shown with the same color). This allows you to see not only which words are used in the reviews, but also in which context.

As we can see, the main topics shown in the analytics panel above and on the graph suggest that the customers who bought the MacBook Pro really like its screen: the first topic is “screen, top, notch”. They also talk about the support that is provided. Let’s now see how these reviews differ by sentiment.
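To make the idea of a word co-occurrence graph more concrete, here is a minimal sketch in plain Python. It is not the InfraNodus algorithm: the sliding window size, the sample reviews, and the use of weighted degree as an influence measure are all assumptions chosen for brevity, and cluster detection is left out.

```python
from collections import Counter

# Hypothetical, already lemmatized reviews; a real pipeline would also remove stopwords.
reviews = [
    ["screen", "top", "notch"],
    ["screen", "notch", "cover", "view"],
    ["support", "great", "screen"],
]


def cooccurrence_edges(documents, window=2):
    """Count pairs of distinct words that fall inside the same sliding window of `window` tokens."""
    edges = Counter()
    for tokens in documents:
        for i, word in enumerate(tokens):
            # Pair the current word with the next (window - 1) tokens.
            for other in tokens[i + 1 : i + window]:
                if other != word:
                    edges[tuple(sorted((word, other)))] += 1
    return edges


def weighted_degree(edges):
    """Sum the edge weights attached to each node as a simple influence measure."""
    degree = Counter()
    for (a, b), weight in edges.items():
        degree[a] += weight
        degree[b] += weight
    return degree


edges = cooccurrence_edges(reviews)
degree = weighted_degree(edges)
for word, weight in degree.most_common(3):
    print(word, weight)
```

Words that connect to many other words get a higher weighted degree, which is one simple way to approximate the “bigger nodes” on the graph. Other centrality measures or a community detection step could be swapped in for a closer analogue.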

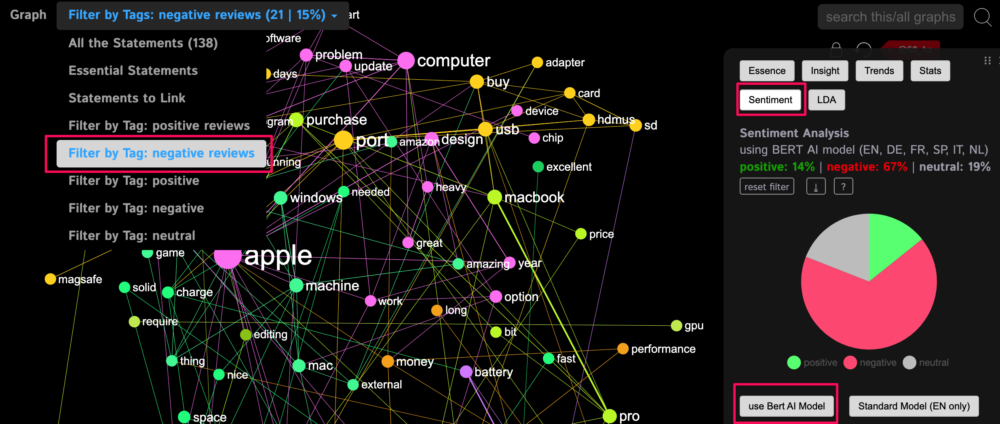

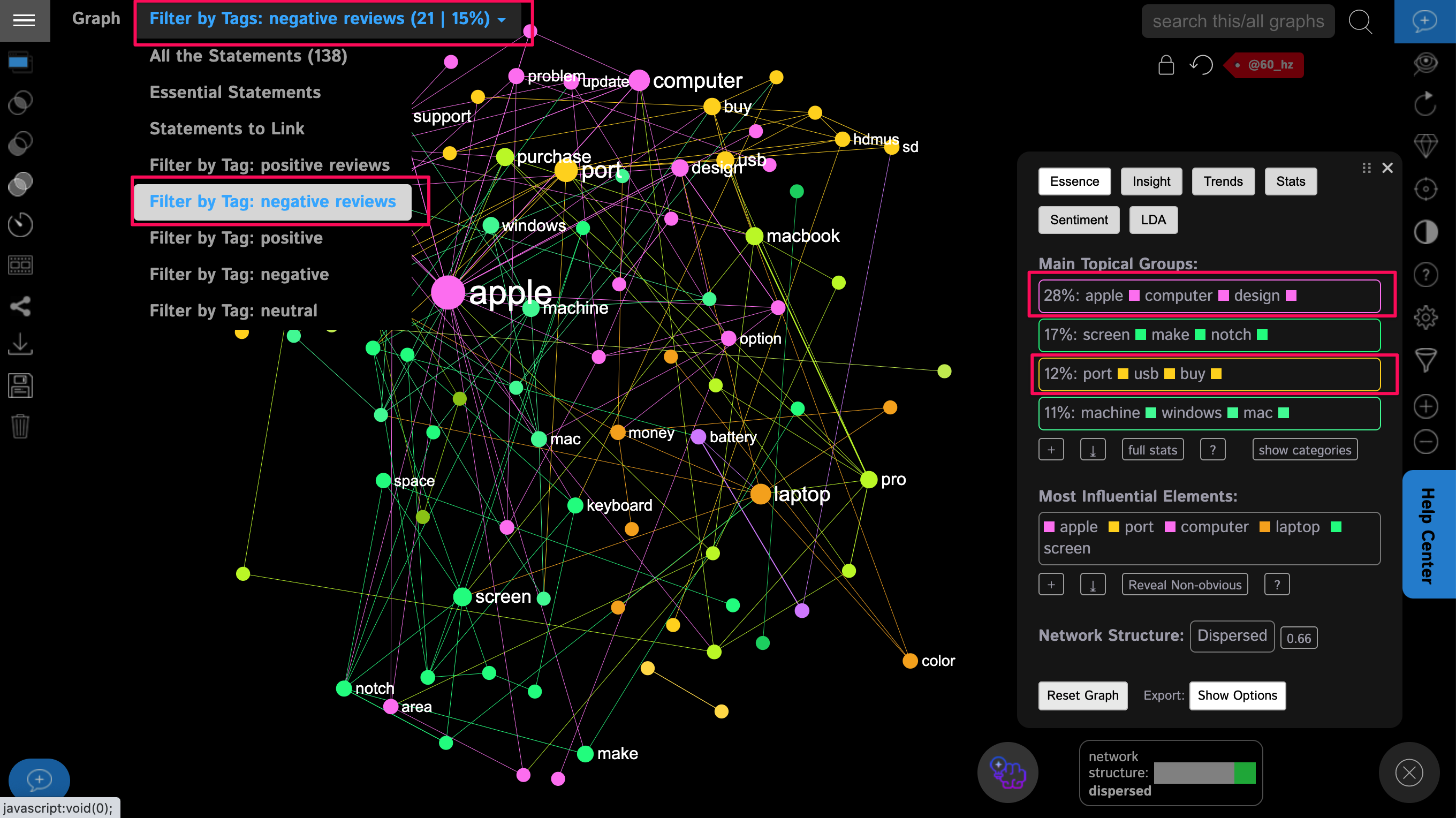

We already have this data in the filter panel at the top. All the 1-, 2-, and 3-star reviews were marked as “negative reviews”, while all the 4- and 5-star reviews were marked as positive. We can then filter the graph by those reviews and see what people talk about in the negative context:

We can see that the customers who rated the product low are talking about the computer’s design and the USB ports — probably they don’t like the feel of the product and the fact that there are not many different ports. We can further clarify what they mean by clicking on the words on the graph or in the Analytics panel to see the context where those words are being used:

As we suspected, the problem is in the design and the lack of USB ports for newer devices. Apparently, a dongle is needed for compatibility and users don’t like it. They also complain about the overall design and incompatibility issues.

AFINN and BERT AI vs Amazon Ratings

Let us now compare how AFINN and BERT AI perform against Amazon’s own ratings. We can use this data to see how precise the AFINN and BERT AI models are.

The easiest way to check the precision is to first select all the negative reviews (rated 1, 2, or 3 stars on Amazon) and compare them to the results provided by the AFINN and BERT AI sentiment analysis modules built into InfraNodus.
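The comparison described above amounts to grouping the reviews by their Amazon rating and then counting which labels a model assigned within each group. A minimal sketch, using made-up records rather than the actual review data, and hypothetical function and field names:

```python
from collections import Counter


def amazon_label(stars):
    """Binary grouping used in this article: 1-3 stars negative, 4-5 stars positive."""
    return "negative" if stars <= 3 else "positive"


def label_shares(reviews, amazon_group):
    """Return the share of each model label among the reviews in one Amazon group."""
    selected = [r["model"] for r in reviews if amazon_label(r["stars"]) == amazon_group]
    total = len(selected)
    if total == 0:
        return {}
    return {lbl: count / total for lbl, count in Counter(selected).items()}


# Hypothetical records: Amazon stars plus the label a sentiment model returned.
reviews = [
    {"stars": 1, "model": "negative"},
    {"stars": 2, "model": "positive"},
    {"stars": 3, "model": "neutral"},
    {"stars": 3, "model": "negative"},
    {"stars": 5, "model": "positive"},
]

shares = label_shares(reviews, "negative")
for lbl, share in sorted(shares.items()):
    print(f"{lbl}: {share:.0%}")
```

The lower the share of “positive” labels among the Amazon-negative reviews, the better the model agrees with the star ratings.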

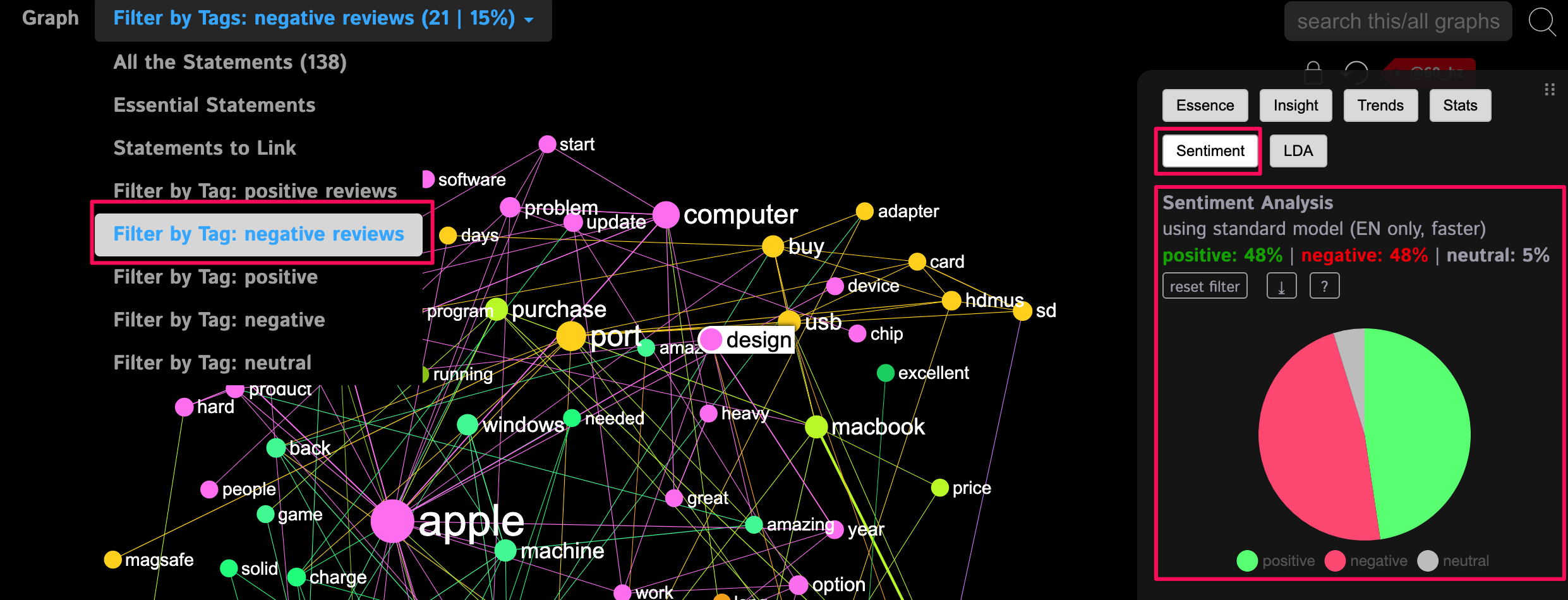

For AFINN, the results are not that good:

As we can see, half of Amazon’s negative reviews are marked as positive and half as negative. The situation is better for the positive reviews (88% positive, 7% negative according to AFINN). One of the reasons could be that we categorize 3-star reviews on Amazon as negative, whereas AFINN would categorize them as neutral. However, there are only 10 (out of 97) reviews that have 3 stars, so they should not affect our results that much. The verdict: AFINN somewhat works, but it doesn’t capture the nuance and only provides an approximate estimation of sentiment.

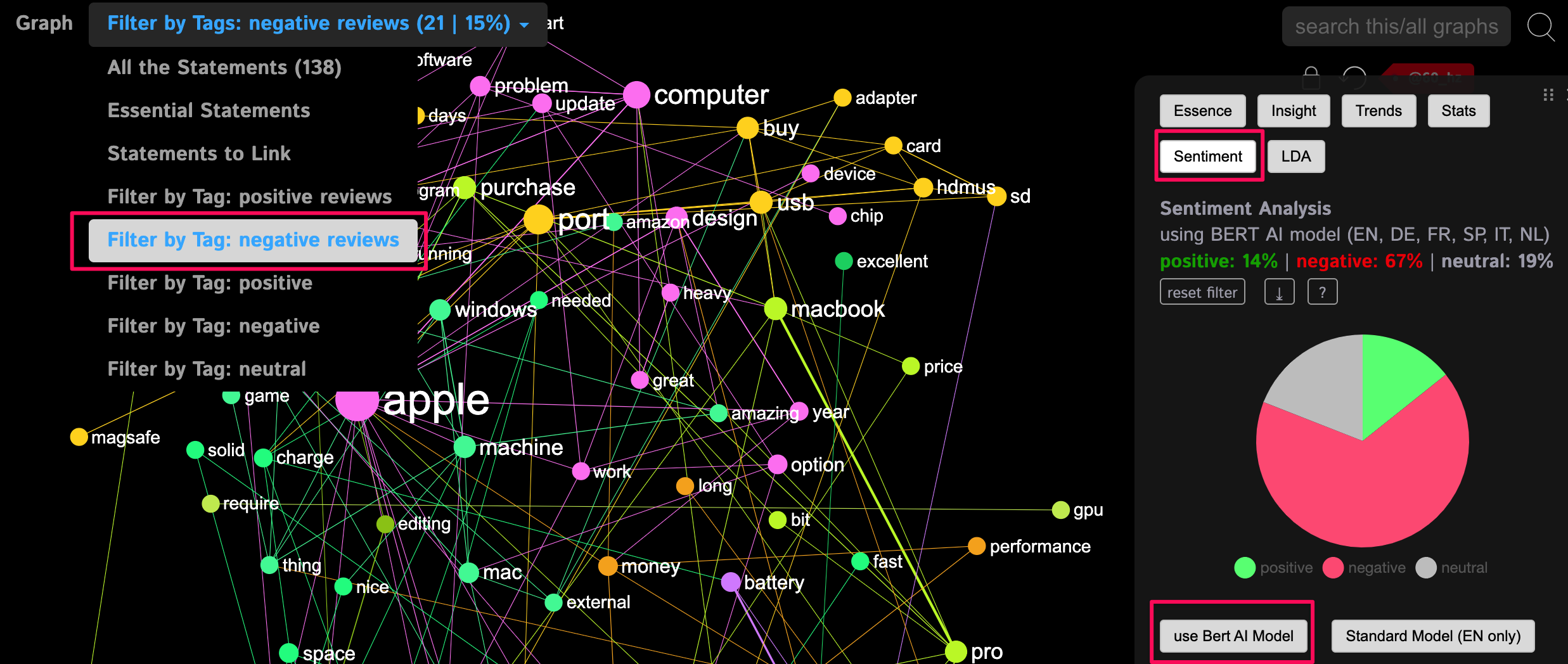

Now, let’s try to use the BERT AI machine-learning model for sentiment analysis. We will select this model in the Analytics > Sentiment panel and wait for the results (let’s run it on negative reviews to see the results):

As we can see, it marked only 14% of the 21 negative reviews on Amazon as positive (3 reviews, to be precise). The rest are marked as negative or neutral. It also looks like it “got” the actual 3-star reviews: there are 4 neutral reviews in total according to BERT (only 1 off), so the system is pretty precise! Upon closer inspection, the low-rated reviews that BERT identified as “positive” are actually quite favorable. The users gave them a low number of stars because they didn’t like one thing that was crucial for them, but they also wrote that they liked everything else.

As a result, we can now use the BERT AI model to filter only the negative reviews and see what people are saying when they talk negatively about the product (both according to Amazon and BERT AI):

We can now see the term “money” in the negative reviews, which means people complain about the price (something we didn’t immediately see using network analysis).

We also see that the “top notch” and “screen” keywords are actually used in a negative context, because people complain about the notch at the top of the screen that covers the view. This is something we didn’t identify during the initial analysis.

Conclusions

In this article, we demonstrated how you can use text network analysis, dictionary-based sentiment analysis models, and the machine-learning-based BERT AI model to better understand the sentiment behind customer reviews using the InfraNodus visual text analysis tool.

In part 2 of this article, we will demonstrate how the same approach can be used for social media discourse analysis using Twitter data from Kaggle.